Among many other things, the Fullstory platform provides high-fidelity renditions of user sessions so that you can easily diagnose friction and provide the best user experience on your site or app.

However, data ingestion at our scale is no small feat. At Fullstory, we use asynchronous message passing and task queues to feed most of the pipeline that supports session recording. We also use asynchronous messaging in other services that power things like generating screen captures from session recordings and data export. In essence, Fullstory is one big distributed system in which tools and services that support asynchronous processing are a must.

Google Cloud Platform (GCP) is the primary provider for almost all of Fullstory’s infrastructure. Historically, pull queues via Cloud Tasks had been the predominant mechanism for asynchronous message passing at Fullstory. However, early last year Google announced that pull queues were scheduled for deprecation on October 1st, 2019, forcing us to find a new task queue provider and migrate our traffic to it.

In this post, we’ll discuss how we live-migrated traffic across all services away from Cloud Tasks while avoiding disruption to our customers. We also cover why we chose Pub/Sub and some lessons learned along the way.

Why Cloud Pub/Sub?

Like Cloud Tasks, Cloud Pub/Sub is a durable, highly available messaging service for sending messages between applications.

Individual consumers (aka subscribers) can retain messages for up to seven days. In addition, the service is entirely managed by Google Cloud. While there are many offerings in the realm of message passing (Kafka, RabbitMQ, Amazon SNS/SQS), Pub/Sub struck the right balance between achieving an expedient migration and maintaining some level of feature parity with Cloud Tasks. This allowed us to minimize the number of service-level and infrastructure-level changes. Lastly, it was also the solution recommended by GCP.

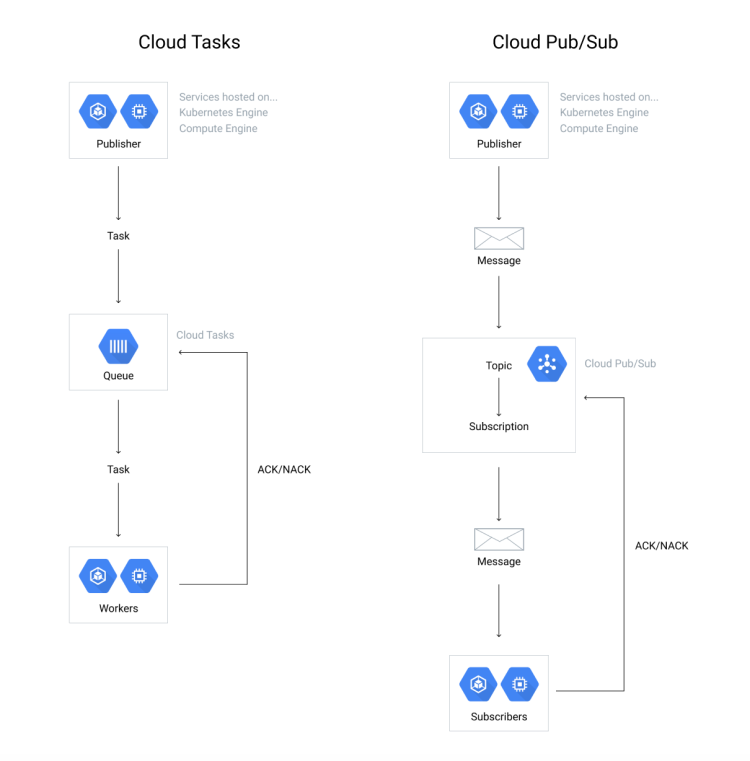

Cloud Pub/Sub passes messages between publishers and subscribers linked by a shared topic. In Cloud Tasks, messages sit in a single queue until a consumer is ready to take them. Pub/Sub instead separates the mechanism for enqueueing tasks (the publisher writes to a topic) from the mechanism for dequeueing them (the application “pulls” messages that were published to the topic through a subscription). Architecturally, this enables further decoupling of producers and consumers.
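To make the topic and subscription split concrete, here is a minimal in-memory sketch of the model described above. It does not use the Pub/Sub client library; the `Topic` and `Subscription` classes, their methods, and the names `session-events` and `session-events-worker` are hypothetical stand-ins chosen for illustration.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Subscription:
    """Hypothetical in-memory stand-in for a pull subscription."""
    name: str
    pending: deque = field(default_factory=deque)     # published, not yet pulled
    outstanding: dict = field(default_factory=dict)   # ack_id -> pulled, not yet acked
    next_ack_id: int = 0

    def pull(self, max_messages=1):
        """Hand out up to max_messages, tracking each one until it is acked."""
        batch = []
        while self.pending and len(batch) < max_messages:
            message = self.pending.popleft()
            ack_id = self.next_ack_id
            self.next_ack_id += 1
            self.outstanding[ack_id] = message
            batch.append((ack_id, message))
        return batch

    def ack(self, ack_id):
        """Acknowledge a pulled message so it is not tracked any longer."""
        self.outstanding.pop(ack_id, None)


@dataclass
class Topic:
    """Hypothetical in-memory stand-in for a topic."""
    name: str
    subscriptions: list = field(default_factory=list)

    def publish(self, data: bytes):
        # The publisher only knows about the topic; every attached
        # subscription receives its own copy of the message.
        for subscription in self.subscriptions:
            subscription.pending.append(data)


topic = Topic("session-events")
worker_sub = Subscription("session-events-worker")
topic.subscriptions.append(worker_sub)

topic.publish(b"page-view")
pulled = worker_sub.pull()
```

The publisher never references a consumer, and the consumer never references a publisher. That indirection is the decoupling the paragraph above refers to.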

In comparison to Cloud Tasks, Cloud Pub/Sub:

- Is globally available
- Has no upper limit on message delivery rate

However, there are tradeoffs. Cloud Pub/Sub does not offer:

- Scheduled delivery of tasks
- Tracking of delivery attempts
- Deduping by message or task name

The Migration Path: From Task Queues to Topics/Subs

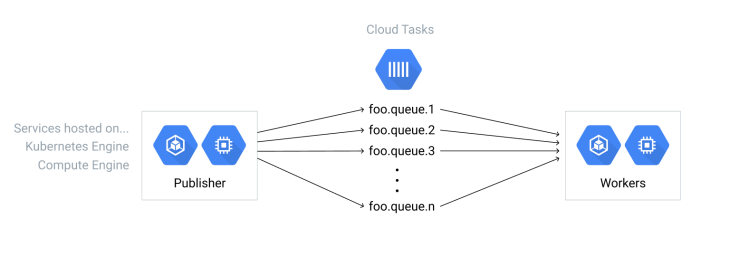

An example of a "conceptual" queue sharded across multiple Cloud Tasks queues.

We opted to mirror our existing infrastructure from Cloud Tasks to Cloud Pub/Sub. For us, this meant each logical queue would have its own topic and a single subscription. If a single logical queue (cloud-task-foo) was sharded across multiple Cloud Tasks queues (cloud-task-foo-{1, 2, ...}), we did not mirror the sharding mechanism in Pub/Sub.

Historically, we used sharded queues because throughput was capped on individual queues in Cloud Tasks. With Pub/Sub, this is not an issue since there is no upper limit on the number of messages that can be delivered per second.

Our "logical queue" consists of a topic and a subscription, with publishers enqueueing messages to the topic and subscribers pulling messages from the subscription.
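As a rough sketch of that mapping, the snippet below collapses sharded Cloud Tasks queue names into one topic and one subscription per logical queue. The numeric shard suffix follows the cloud-task-foo-{1, 2, ...} example above; the `-sub` subscription suffix and both function names are assumptions made for illustration, not our actual naming scheme.

```python
import re

# Matches a trailing "-<number>" shard suffix, as in "cloud-task-foo-2".
# Assumption: no unsharded queue name legitimately ends in "-<number>".
SHARD_SUFFIX = re.compile(r"-\d+$")


def logical_queue(cloud_tasks_queue: str) -> str:
    """Strip the shard suffix to recover the logical queue name."""
    return SHARD_SUFFIX.sub("", cloud_tasks_queue)


def pubsub_names(cloud_tasks_queue: str) -> dict:
    """Map any shard of a logical queue to its single topic and subscription."""
    base = logical_queue(cloud_tasks_queue)
    return {"topic": base, "subscription": f"{base}-sub"}


shards = ["cloud-task-foo-1", "cloud-task-foo-2", "cloud-task-foo-3"]
targets = {pubsub_names(shard)["topic"] for shard in shards}
```

Every shard resolves to the same topic, so publishers that used to pick a shard can publish to a single destination.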

Since each “queue” is technically a topic and subscription, this enables more interesting architectures where messages are further decoupled from the process that is actually consuming them. However, for the sake of cutting over, we felt it was easiest not to deviate too far from the established architecture. Keeping the same topology with respect to the existing message queues allowed us to use similar metrics for monitoring during the cutover, namely: task rates and throughput, task handling latencies, failure rates, queue length, and message age.

Messaging Costs

Cloud Pub/Sub messaging costs scale with the size of the message. The upper limit on message size for Cloud Pub/Sub messages is 10 MB, compared to only 100 KB for Cloud Tasks. With Cloud Tasks, our services were able to pass message content in-band, rather than through an external store (such as Cloud Bigtable or Cloud Storage), whenever the content was smaller than the Cloud Tasks message size limit.

Since Pub/Sub allows for even larger messages, in an ideal world we would not have had to change anything. However, Pub/Sub pricing scales with the volume of data transmitted ($40/TiB after the first 10 GiB) as opposed to the raw number of queries. While we could have continued passing data in-band, it wouldn’t have been cost effective at our scale.

Under Cloud Tasks, our services already treated in-band delivery as an optimization: message content was passed in-band when it was smaller than the Cloud Tasks size limit, and when a message was too large to enqueue, we fell back to enqueueing a task whose bulk content was kept out-of-band in another persistent store. Luckily, most services relied on in-band data as an optimization rather than a dependency, so the out-of-band path already existed. The only change we had to make was to make that out-of-band path the default all the time.
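A quick back-of-the-envelope calculation shows why message size matters more than message count under this pricing model. The sketch below uses only the rate and free allowance quoted above; the message counts and payload sizes are made-up numbers for illustration, not Fullstory's real traffic, and the function ignores any other billing rules Pub/Sub may apply.

```python
GIB = 1024 ** 3
TIB = 1024 ** 4

FREE_BYTES = 10 * GIB      # allowance quoted above
PRICE_PER_TIB = 40.0       # USD per TiB, rate quoted above


def throughput_cost(bytes_transferred: int) -> float:
    """Cost in USD for a given data volume, after the free allowance."""
    billable = max(0, bytes_transferred - FREE_BYTES)
    return billable / TIB * PRICE_PER_TIB


# Hypothetical workload: the same number of messages, sent two ways.
messages = 1_000_000_000
in_band_cost = throughput_cost(messages * 50 * 1024)   # 50 KiB payload in every message
pointer_cost = throughput_cost(messages * 200)         # 200-byte reference to an external store
```

The message count is identical in both cases, but the in-band variant moves 256 times as many bytes, and the bill follows the bytes.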
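The in-band and out-of-band paths can be sketched as follows. This is an illustration under assumptions, not our production code: `blob_store` is a plain dictionary standing in for a persistent store such as Cloud Storage or Cloud Bigtable, and `build_message`, `read_payload`, and the `prefer_in_band` flag are hypothetical names.

```python
import uuid

IN_BAND_LIMIT = 100 * 1024   # the Cloud Tasks message size limit quoted above

# Hypothetical stand-in for an external persistent store.
blob_store = {}


def build_message(payload: bytes, prefer_in_band: bool = False) -> dict:
    """Build the message to enqueue.

    With prefer_in_band=True, small payloads travel inside the message and
    only oversized ones fall back to the external store (the Cloud Tasks era
    behavior). With the default, every payload goes out-of-band and the
    message carries only a reference.
    """
    if prefer_in_band and len(payload) <= IN_BAND_LIMIT:
        return {"inline": payload}
    key = f"payloads/{uuid.uuid4()}"
    blob_store[key] = payload
    return {"ref": key}


def read_payload(message: dict) -> bytes:
    """Consumer side: accept either form, so both paths keep working."""
    if "inline" in message:
        return message["inline"]
    return blob_store[message["ref"]]


small = b"click"
old_style = build_message(small, prefer_in_band=True)   # carries the payload itself
new_style = build_message(small)                        # carries only a reference
```

Because the consumer already understands both forms, switching the default on the producer side requires no consumer changes.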

The Cutover

During migration, we first duplicated messages to Cloud Pub/Sub. Tasks enqueued via Cloud Tasks were also published to their corresponding Pub/Sub topics. Messages that originated from Pub/Sub subscription queues were discarded (via immediate ACK) rather than being handled.

There were three benefits at this stage:

- It ensured that we had the proper observability (in terms of metrics, monitoring, and logging) needed for a safe migration.
- It allowed us to verify that there were no significant differences in task throughput when sending messages through Pub/Sub.
- It allowed us to confirm that subscriptions were attached to their associated topics and that services (and their associated service accounts) had the correct IAM permissions to publish to and consume from the correct topics and subscriptions.
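The mirroring stage described above can be sketched with in-memory lists standing in for the two systems. All names here (`enqueue`, `drain_cloud_tasks`, `drain_pubsub_shadow`, the `metrics` dictionary) are hypothetical; the point is only the shape of the flow: write to both, handle from one, acknowledge and count the other.

```python
# Hypothetical in-memory stand-ins for the two messaging systems.
cloud_tasks_queue = []
pubsub_topic = []

handled = []                          # tasks that were actually processed
metrics = {"pubsub_mirrored": 0}      # observability for the shadow path


def enqueue(task: str) -> None:
    """Producer: Cloud Tasks stays the source of truth; Pub/Sub gets a copy."""
    cloud_tasks_queue.append(task)
    pubsub_topic.append(task)


def drain_cloud_tasks() -> None:
    """Existing consumer path: tasks are handled as before."""
    while cloud_tasks_queue:
        handled.append(cloud_tasks_queue.pop(0))


def drain_pubsub_shadow() -> None:
    """Shadow consumer: acknowledge immediately and record a metric,
    without handling the task a second time."""
    while pubsub_topic:
        pubsub_topic.pop(0)
        metrics["pubsub_mirrored"] += 1


for task in ["index-session-1", "index-session-2", "export-3"]:
    enqueue(task)
drain_cloud_tasks()
drain_pubsub_shadow()
```

Comparing the mirrored count against the handled count is one simple way to check that the two paths see the same traffic before any real work moves over.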

Next, a cutover was staged for each task queue via a percentage rollout to Pub/Sub. Each process that enqueued to Cloud Tasks started with 0.1% of its traffic directed to Pub/Sub. At this level, we were able to identify latent issues stemming from semantic differences between Cloud Tasks and Pub/Sub (namely panics from bad tasks and missing fields). It took time, but once these issues were resolved, traffic was slowly ramped up until nothing was enqueued via Cloud Tasks. Finally, the Cloud Tasks pullers were stopped and all Cloud Tasks code was removed.
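One way to implement a percentage rollout like this is to hash a stable task identifier into a bucket and compare it against the current rollout percentage. The post does not say how our routing decision was made, so treat the hashing approach, the `use_pubsub` function, and the task ID format below as assumptions for illustration.

```python
import hashlib

BUCKETS = 100_000   # gives 0.001% granularity, enough to express 0.1%


def use_pubsub(task_id: str, rollout_percent: float) -> bool:
    """Return True if this task should be enqueued via Pub/Sub.

    The bucket is derived from the task ID, so the decision is stable for a
    given task and a task routed at a low percentage stays routed as the
    percentage ramps up.
    """
    digest = hashlib.sha256(task_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % BUCKETS
    return bucket < rollout_percent * (BUCKETS / 100)


task_ids = [f"task-{i}" for i in range(100_000)]
early_adopters = [t for t in task_ids if use_pubsub(t, 0.1)]
```

A random draw per task would also work. Hashing has the advantage that the same task always takes the same path, which makes issues found at 0.1% easier to reproduce.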

Brief Reflections

When performing live migrations involving managed services, it’s important to completely understand their costs, semantics, and nuances. We were able to curtail cost concerns by recognizing that the pricing model for Cloud Pub/Sub scales with the size of the message (as opposed to the raw number of messages or queries) and adapting our infrastructure accordingly.

All said, distributed systems are hard. If you’re facing a similar challenge, make sure you leave sufficient time for the inevitable trial and error during cutovers so that your migration goes smoothly.