Today, Fullstory’s production environment contains several large Apache Solr clusters, each composed of hundreds of identical nodes. We use Solr as an analytics database to power Fullstory’s highly interactive dashboards, analytics, and search experience. Due to the demands of our particular workloads and scale, we’ve invested significantly both in Solr as an open-source project and in our own plugins and operations tooling. This is the first post of a two-part series focusing on how Fullstory engineering has approached scaling and stabilizing our Solr clusters.

In this post, I will share how we employed single-responsibility node types to improve cluster stability during peak hours and to increase the maximum feasible cluster size. In the process, we built the ability to configure several specialized Solr node types, support for which has been contributed to Apache Solr as of the 9.0 release. In the next post, I will discuss how we use a distributed state management protocol to help us restart or shut down Solr nodes efficiently.

Introduction

As a SaaS company serving thousands of customers, we store data for each customer in separate collections, which are similar to SQL tables. Solr collections can be scaled across nodes by breaking up the collection into individual shards. Depending on the size of a customer’s analytics dataset, these collections may contain hundreds or even thousands of shards. As a result, every node in the cluster ends up serving traffic for hundreds of individual shards across collections, and some queries touch every single node in a cluster.

In 2014, we began with a single Solr cluster, having only one type of node. At the time, this node would store and index data, function as a coordinator for distributed queries, and perform state management related tasks for collections. Over time, our Solr clusters have continued to expand and new functionality has been required to scale.

Problems

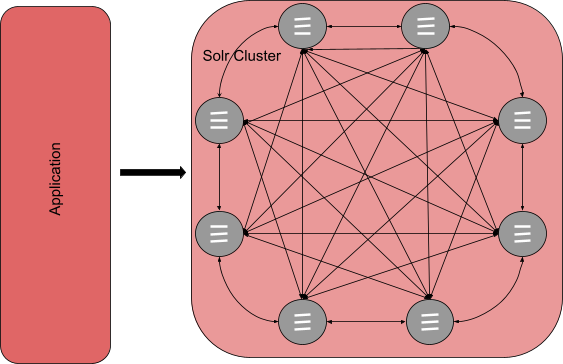

As our customer base grew, we discovered that nodes were experiencing instability during peak hours. In our system, the client application randomly selects a Solr node to send an analytical query request. The chosen node then acts as the query’s coordinator, sending a request to each shard of the collection, aggregating the responses from all the shards, and returning the aggregated response to the application. The diagram above illustrates how each node in the Solr cluster ends up communicating with every other node.
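To make the coordinator's cost concrete, here is a minimal scatter-gather sketch. It is not Solr's implementation: `query_shard`, `coordinate`, and `build_shards` are hypothetical names, shards are modeled as in-memory lists of `(score, doc_id)` pairs, and the "query" is simply a top-k by score. What it does show is that the coordinating node has to hold every shard's partial response until the merge completes.

```python
import heapq
import random
from concurrent.futures import ThreadPoolExecutor


def query_shard(shard_docs, top_k):
    """Stand-in for a per-shard request: return the shard's top_k (score, doc_id) pairs."""
    return heapq.nlargest(top_k, shard_docs)


def coordinate(shards, top_k):
    """Fan the query out to every shard, then merge the partial results."""
    workers = max(1, min(32, len(shards)))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(lambda docs: query_shard(docs, top_k), shards))
    # Every partial response stays buffered on the coordinator until the merge is done.
    buffered = sum(len(p) for p in partials)
    merged = heapq.nlargest(top_k, (hit for p in partials for hit in p))
    return merged, buffered


def build_shards(num_shards, docs_per_shard, seed=0):
    """Generate fake shards with random scores (illustration only)."""
    rng = random.Random(seed)
    return [
        [(rng.random(), f"shard{s}-doc{d}") for d in range(docs_per_shard)]
        for s in range(num_shards)
    ]


if __name__ == "__main__":
    shards = build_shards(num_shards=200, docs_per_shard=1000)
    top, buffered = coordinate(shards, top_k=10)
    print(f"buffered {buffered} partial hits to return {len(top)}")
```

The buffered volume grows with the number of shards, which is why a collection with hundreds or thousands of shards puts so much pressure on whichever node happens to coordinate.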

Consequently, the coordinator node does resource-intensive work during each distributed query, consuming significant CPU and memory for anywhere from several seconds to tens of seconds. The node also experiences a high rate of young and old garbage collection (GC). This combination eventually leads to problems with responsiveness and stability.

In addition to its query coordination responsibilities, each node is also responsible for collection-related administrative tasks, such as creating new collections, splitting shards to store more data, and moving shards to rebalance the data on each node. These distributed tasks require persistent bookkeeping, but over time, we observed that Solr nodes were not resilient enough to handle these tasks in the event of node outage, unresponsiveness, or rolling upgrades. These disruptions corrupted the state of Solr clusters, leaving Solr unable to track the leader for a given shard. Recovering required manual intervention, a very cumbersome and error-prone task.

Solution

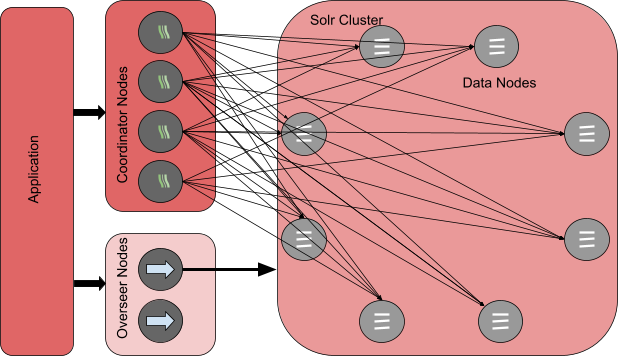

In 2021, to address these issues, we introduced three distinct types of specialized nodes in the Solr cluster, each with a single responsibility.

The first type is the Data node, which stores and indexes data for a given set of shards. This node type is responsible for serving up results for queries for the individual shards on that node.

The second type is the Query Coordinator node, which serves as the coordinator for distributed queries. With this approach, the client application sends a query to a random Coordinator node, which then becomes the coordinator for that query. This node distributes requests to individual shards residing on Data nodes and then aggregates the results to return a response to the client. This removes the query coordination load from the Data nodes and also decreases the frequency of young and old garbage collection on them.
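On the client side, the change amounts to narrowing the pool of nodes a query can be sent to. The sketch below assumes a hypothetical in-memory inventory (`CLUSTER`, with made-up hostnames and role labels) and a hypothetical helper `pick_coordinator`; a real client would obtain this information from cluster state rather than a hard-coded dictionary.

```python
import random

# Hypothetical inventory: hostnames and role labels are invented for illustration.
CLUSTER = {
    "solr-data-1:8983": "data",
    "solr-data-2:8983": "data",
    "solr-data-3:8983": "data",
    "solr-coord-1:8983": "coordinator",
    "solr-coord-2:8983": "coordinator",
    "solr-overseer-1:8983": "overseer",
}


def pick_coordinator(cluster, rng=random):
    """Choose a random Query Coordinator node; Data and Overseer nodes are never picked."""
    coordinators = sorted(node for node, role in cluster.items() if role == "coordinator")
    if not coordinators:
        raise RuntimeError("no coordinator nodes available")
    return rng.choice(coordinators)


if __name__ == "__main__":
    print("sending query to", pick_coordinator(CLUSTER))
```

Random selection is kept from the original design; only the candidate set changes, so Data nodes never take on coordination work.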

The third type of node is the Overseer node, which solely manages the collection-related administrative tasks. An Overseer node orchestrates new collection creation, shard splitting, and shard moving tasks. At Fullstory, we also have another service called Solrman, which ensures a cluster is balanced and collections have the appropriate number of shards. Solrman periodically triggers shard split and shard move tasks based on the current state of the cluster and collections. Now, these administrative tasks are protected from any potential instability in the Solr cluster. The diagram above depicts the different types of nodes in the Solr cluster that helped us achieve stability.
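To give a feel for the kind of decisions a balancing service makes, here is a toy planner. It is not Solrman's actual logic: the `Shard` type, the `plan_tasks` function, and both thresholds (`max_shard_gb`, `imbalance_gb`) are assumptions chosen purely for illustration. It proposes a split for any shard over a size limit, and one move from the fullest node to the emptiest node when the gap between them is too large.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Shard:
    name: str
    node: str
    size_gb: float


def plan_tasks(shards, nodes, max_shard_gb=50.0, imbalance_gb=100.0):
    """Return a list of proposed ("split", ...) and ("move", ...) tasks."""
    # Propose a split for every shard above the (hypothetical) size limit.
    splitting = {s.name for s in shards if s.size_gb > max_shard_gb}
    tasks = [("split", s.name) for s in shards if s.name in splitting]

    # Compute per-node load, including nodes that currently hold no shards.
    load = {node: 0.0 for node in nodes}
    for s in shards:
        load[s.node] = load.get(s.node, 0.0) + s.size_gb

    if len(load) >= 2:
        busiest = max(load, key=load.get)
        idlest = min(load, key=load.get)
        if load[busiest] - load[idlest] > imbalance_gb:
            # Move the smallest shard that is not already scheduled for a split.
            candidates = [s for s in shards if s.node == busiest and s.name not in splitting]
            if candidates:
                smallest = min(candidates, key=lambda s: s.size_gb)
                tasks.append(("move", smallest.name, busiest, idlest))
    return tasks


if __name__ == "__main__":
    shards = [Shard("a", "n1", 60.0), Shard("b", "n1", 45.0), Shard("c", "n2", 10.0)]
    for task in plan_tasks(shards, nodes=["n1", "n2", "n3"]):
        print(task)
```

Whatever policy produces these tasks, the tasks themselves are executed through the Overseer, which is why isolating the Overseer from query load matters.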

Results

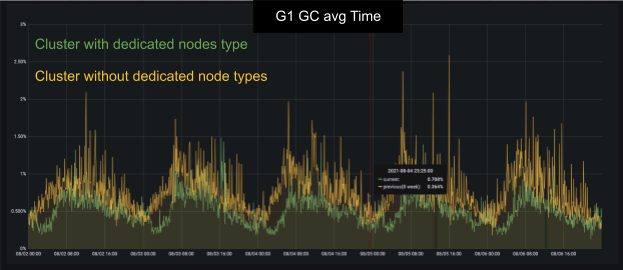

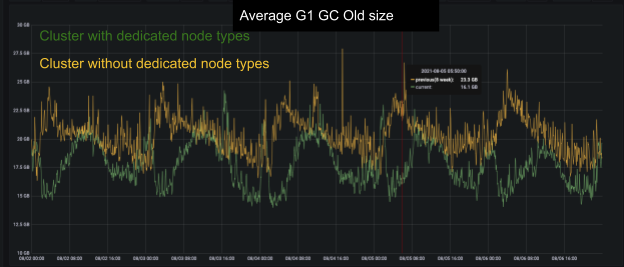

We have observed that the heap profiles of the Data node and the Query Coordinator node are distinct. Specifically, Data nodes tend to have more long-lived objects, while query coordination generates more short-lived objects that may end up in the old generation. As a result, the creation of separate node types allows us to manage garbage collection behavior in accordance with their respective responsibilities.

The two graphs above compare G1GC time and average G1GC old generation size over time, with and without the new node role types in the Solr cluster. The y-axis in the first graph represents the G1GC time, while the y-axis in the second graph represents the average G1GC old generation size. Both graphs show a decrease in these metrics when Query Coordinator nodes are present in the Solr cluster. The x-axis in both graphs represents time in weeks.

The green line shows the cluster with the new node role types, and the yellow line shows the cluster without them.

Summary

We started with a single node type in the Solr cluster, where each node had multiple responsibilities. Soon we found that the Solr cluster was not sustaining the load in peak hours. That led us to introduce three dedicated node types within a Solr cluster:

The Query Coordinator nodes handle coordination for distributed queries. This leads to a decrease in short-lived objects on Data nodes, resulting in less frequent young and old generation garbage collection and greater stability.

The Overseer nodes are not affected by any cluster load, which makes Solr collection-related admin tasks more resilient.

As a result of these different node types, Data nodes are no longer overloaded by competing responsibilities. These changes have helped us make our Solr clusters more stable, and we are better able to scale to support future growth.

In the next post, we will introduce another challenge we faced and how we introduced a new Solr collection state management protocol which helps us to restart Solr nodes efficiently.

As an added bonus, we were able to contribute to the open-source community by adding the different node-type features (data, coordinator, and overseer) to Apache Solr. These node roles are now available in the latest Apache Solr 9.0 release and you can read more about them in the Solr documentation.
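As a rough illustration of how the three node types might be expressed at startup, the sketch below builds a value for the `solr.node.roles` system property. The property name and the role and mode names (`data:on|off`, `overseer:allowed|preferred|disallowed`, `coordinator:on|off`) reflect my reading of the Node Roles page in the Solr Reference Guide and should be checked against the Solr version you run. The `ROLE_PROFILES` mapping and the `node_roles_property` helper are hypothetical, and the specific mode combinations are one plausible choice rather than our production configuration.

```python
# Hypothetical mapping from a node type to role modes. Verify role and mode
# names against the Solr Reference Guide for your version before relying on them.
ROLE_PROFILES = {
    "data": {"data": "on", "overseer": "disallowed"},
    "coordinator": {"coordinator": "on", "data": "off", "overseer": "disallowed"},
    "overseer": {"overseer": "preferred", "data": "off"},
}


def node_roles_property(node_type):
    """Build a JVM system property string for the given node type."""
    modes = ROLE_PROFILES[node_type]
    value = ",".join(f"{role}:{mode}" for role, mode in sorted(modes.items()))
    return f"-Dsolr.node.roles={value}"


if __name__ == "__main__":
    for node_type in ROLE_PROFILES:
        print(node_type, "->", node_roles_property(node_type))
```

Keeping the mapping in one place makes it easy to see that each node type turns on exactly one responsibility and turns the others off.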