Every click, scroll, and interaction is a digital breadcrumb—what we call a behavioral data signal or digital body language—that paints a picture of how users naturally engage with websites and applications. Fullstory captures these moments with precision, transforming fleeting user sessions into actionable insights about customer journeys, frustrations, and successes.

As organizations increasingly turn to machine learning (ML) and artificial intelligence (AI) to optimize user experiences and detect fraudulent activities, the structure and quality of data become paramount. This is where Fullstory Anywhere: Warehouse shines, serving as a comprehensive source of truth for user behavior data. By recording every nuanced interaction, from rage clicks to hesitation moments, Fullstory creates a digital observatory of user behavior across web and mobile applications.

While this rich behavioral data holds immense potential, harnessing it for ML applications presents a unique challenge. Fullstory's data comes in the form of high-volume, complex nested JSON structures, complete with user interactions, network requests, and rich metadata.

In this blog post, we'll explore how to unlock the full potential of this behavioral data goldmine, showing you practical ways to transform raw Fullstory data into structured, labeled datasets perfect for ML, data science, and AI initiatives using data build tool (dbt).

The Fullstory data model & behavioral data labeling

Before exploring data labeling with dbt, it's essential to understand the fundamental structure of Fullstory's behavioral data. When synced to your data warehouse, this data captures a comprehensive array of user interactions in its raw, untransformed state. These behavioral signals include mouse movements, clicks, scrolls, form completions, and other key user interactions, providing a detailed record of how users engage with digital content. Let's examine Fullstory’s native data structure to better understand how these digital interaction points are captured and organized before any transformation takes place.

Fullstory event model

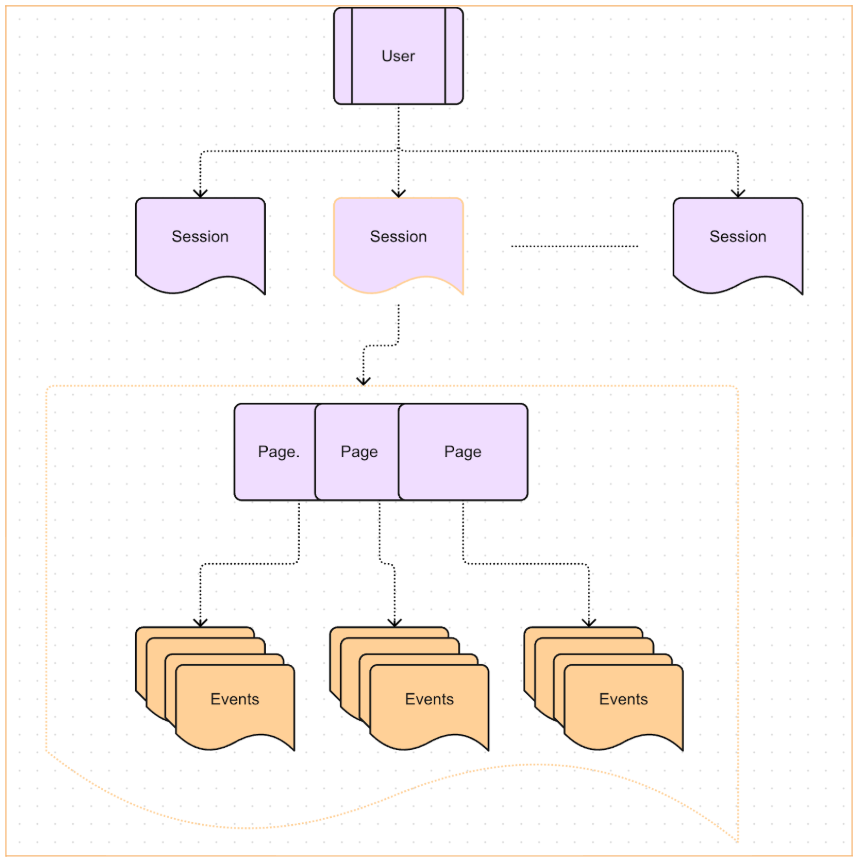

At its core, Fullstory employs an event-centric schema to structure all behavioral data. Every user interaction, such as clicking on a specific element, navigating to a page, or any other discrete action, is recorded as a distinct event with a unique event_id. These events are collected within a Fullstory session, creating a continuous narrative of user behavior. For web applications, each session is tied to a specific user through a device_id, which corresponds to the user's browser cookie. As users navigate through different pages during a single session, each page receives its own view_id, allowing for precise tracking across the entire user journey.

Fullstory enriches each event with two key types of contextual metadata: source_properties and event_properties. The source_properties provide essential context about where and how the event originated, including the source_type that identifies the platform (web or mobile) through which the event was captured. These properties contain valuable contextual data such as geographical location, page information, and device specifications.

Additionally, event_properties are specific to each event_type and capture the unique characteristics of that particular interaction. For example, when a user clicks an element, the event_properties include detailed information about the target element, such as its text content, CSS attributes, and element definition.

This rich metadata structure enables detailed analysis and precise event categorization.
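Here is a minimal Python sketch that turns one raw event into flat columns, which makes the nested structure concrete. The row is hypothetical. The top-level column names follow the description above, but session_id and every key inside the two JSON strings (url.path, user_agent.device, target.text) are assumptions for illustration, not Fullstory's documented schema. In the warehouse, the same step is usually done with SQL JSON-extraction functions.

```python
import json

# Hypothetical warehouse row. event_id, device_id, view_id, event_type,
# source_properties and event_properties follow the prose above; session_id
# and every key inside the two JSON strings are illustrative assumptions,
# not Fullstory's documented schema.
RAW_EVENT = {
    "event_id": "evt-001",
    "device_id": "dev-123",
    "session_id": "ses-1",
    "view_id": "view-1",
    "event_type": "click",
    "source_properties": json.dumps(
        {"url": {"path": "/"}, "user_agent": {"device": "Desktop"}}
    ),
    "event_properties": json.dumps({"target": {"text": "Home"}}),
}


def flatten_event(event: dict) -> dict:
    """Parse the nested JSON columns and lift a few fields into flat columns."""
    source = json.loads(event["source_properties"])
    props = json.loads(event["event_properties"])
    return {
        "event_id": event["event_id"],
        # Assumes a session id is only unique within one device, so the
        # two ids are combined into a single session key.
        "session_key": f"{event['device_id']}:{event['session_id']}",
        "view_id": event["view_id"],
        "event_type": event["event_type"],
        # .get(..., {}) keeps a missing nested object from raising KeyError.
        "page_path": source.get("url", {}).get("path"),
        "device": source.get("user_agent", {}).get("device"),
        "target_text": props.get("target", {}).get("text"),
    }


if __name__ == "__main__":
    print(flatten_event(RAW_EVENT))
```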

→ Want to dive deeper? You can review the complete data model in our developer docs.

Labeling behavioral data

With a solid understanding of Fullstory's event model and raw data structure, we can now tackle the crucial task of transforming this behavioral data into formats suitable for ML and analytics applications. This transformation process is essential. While Fullstory captures every nuanced interaction, the path from raw events to actionable insights requires thoughtful data labeling strategies.

In the context of supervised machine learning tasks, our goal is to create clear target variables that represent specific outcomes we want to predict or analyze. These outcomes might range from high-value actions like completed purchases to important user behaviors such as successful form submissions or feature adoption. The challenge lies in distilling Fullstory's rich event stream into precise, meaningful labels that accurately represent these user behaviors.

Before diving into specific labeling techniques, we need to consider two fundamental aspects of our labeling strategy: the granularity at which we'll analyze behavior (session vs. user level) and the type of target variable we'll create (binary, categorical, or numeric). These decisions will shape not only our data transformation approach but also the kinds of insights and predictions we can generate from our ML models.

Granularity: We must determine the appropriate level at which to associate our labels. This could be at the user level (aggregating across multiple sessions to understand long-term behavior patterns) or at the session level (focusing on individual user journeys and their immediate outcomes).

Target Variable Type: The nature of our prediction goal determines the type of label we need to generate. This could be:

Binary labels (e.g., did the user complete a purchase: yes/no)

Categorical labels (e.g., user segment types: new, returning, power user)

Numeric values (e.g., total purchase amount, time spent on site)

Understanding these factors is crucial as they influence both our transformation logic and the subsequent ML model selection.
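The following Python snippet shows how the three target types differ. It derives one label of each type per user from hypothetical per-session summaries. The SessionSummary fields and the segment thresholds are illustrative assumptions. In practice, they would come from your own business definitions.

```python
from dataclasses import dataclass


@dataclass
class SessionSummary:
    user_id: str
    session_id: str
    purchased: bool      # did the session contain an "Order Completed" event?
    order_total: float   # summed order value in the session, 0.0 if none


def derive_user_labels(sessions):
    """Build one binary, one categorical and one numeric label per user."""
    by_user = {}
    for s in sessions:
        by_user.setdefault(s.user_id, []).append(s)

    labels = {}
    for user_id, rows in by_user.items():
        n_sessions = len(rows)
        # Hypothetical segment thresholds, for illustration only.
        if n_sessions == 1:
            segment = "new"
        elif n_sessions < 5:
            segment = "returning"
        else:
            segment = "power user"
        labels[user_id] = {
            "binary": any(r.purchased for r in rows),     # purchased: yes/no
            "categorical": segment,                       # user segment
            "numeric": sum(r.order_total for r in rows),  # total purchase amount
        }
    return labels


if __name__ == "__main__":
    demo = [
        SessionSummary("User 1", "Session 1", True, 42.0),
        SessionSummary("User 1", "Session 2", False, 0.0),
        SessionSummary("User 2", "Session 3", True, 19.5),
    ]
    for user, label in derive_user_labels(demo).items():
        print(user, label)
```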

To demonstrate how to effectively label Fullstory data, let's focus on a common binary classification problem: predicting whether a user will make a purchase. In Fullstory, purchase events can be tracked with a custom event (e.g., an event named “Order Completed”), which is typically configured during implementation to capture successful transactions.

Consider the following user sessions:

User 1:

Has two separate sessions

Each session represents a unique visit to the website

User 2:

Has one session

Represents a single visit to the website

In both scenarios, we need to be explicit about how the presence of an “Order Completed” custom event affects our labeling strategy.

A. Label at the session level

When labeling at the session level, we identify sessions where a purchase occurred by creating a binary target variable called has_not_purchased. This label will be true if the “Order Completed” custom event appears within a session, and false otherwise.

We evaluate each session independently, regardless of the user's behavior in other sessions. This approach captures the immediate context of a purchase decision rather than the user's overall purchasing history.

In our example scenario:

User 1's first session contains an “Order Completed” event (labeled true)

User 1's second session has no purchase event (labeled false)

User 2's single session includes an “Order Completed” event (labeled true)

The resulting labeled dataset would look like this:

| User Id | Session Id | has_not_purchased |
| --- | --- | --- |
| User 1 | Session 1 | true |
| User 1 | Session 2 | false |
| User 2 | Session 2 | true |
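The session-level rule fits in a few lines of Python. This sketch uses a hypothetical, simplified event shape. It also assumes that custom events carry the event_type "custom" and store the event name in a separate field. In real Fullstory data, the name would be read from event_properties. The flag it computes corresponds to the has_not_purchased column in the table above, which this post defines as true when the session contains an “Order Completed” event.

```python
from collections import namedtuple

# Simplified, hypothetical event shape; the "custom" event_type value and the
# separate name field are assumptions made for this sketch.
Event = namedtuple("Event", ["user_id", "session_id", "event_type", "name"])

# Toy events reproducing the scenario above. Session ids are treated as
# unique only per user, so (user_id, session_id) is the session key.
EVENTS = [
    Event("User 1", "Session 1", "navigate", None),
    Event("User 1", "Session 1", "custom", "Order Completed"),
    Event("User 1", "Session 2", "navigate", None),
    Event("User 2", "Session 2", "navigate", None),
    Event("User 2", "Session 2", "custom", "Order Completed"),
]


def is_label_event(e):
    return e.event_type == "custom" and e.name == "Order Completed"


def label_sessions(events):
    """Session-level label: True if the session has at least one label event."""
    labels = {}
    for e in events:
        key = (e.user_id, e.session_id)
        # Every session gets an entry; once a label event is seen it stays True.
        labels[key] = labels.get(key, False) or is_label_event(e)
    return labels


if __name__ == "__main__":
    for (user, session), flag in label_sessions(EVENTS).items():
        print(user, session, flag)
```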

B. Label through session aggregation

While session-level analysis provides immediate context, sometimes we need to understand user behavior across their entire journey. User-level labeling involves aggregating events across all sessions belonging to a single user, providing a more comprehensive view of user behavior over time.

In our example, we'll create the same binary has_not_purchased label, but now at the user level. A user receives a true label if they have completed a purchase in any of their sessions. This approach captures whether a user has ever converted, regardless of which specific session contained the purchase.

Looking at our scenario:

User 1 has two sessions:

Session 1 contains an “Order Completed” event

Session 2 has no purchase event

Result: User 1 is labeled true due to the purchase in Session 1

User 2 has one session:

Session contains an “Order Completed” event

Result: User 2 is labeled true

The resulting labeled dataset becomes:

| User Id | has_not_purchased |
| --- | --- |
| User 1 | true |
| User 2 | true |
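Rolling session labels up to users is an aggregation with "any" semantics. The sketch below expresses it in SQL and runs it through Python's built-in sqlite3 module, so it is self-contained. The table and column names are illustrative. SQLite has no boolean type, so the labels are stored as 0/1, and max() acts as "any session was true".

```python
import sqlite3

# Session-level labels from the previous step, stored as 0/1 because SQLite
# has no separate boolean type.
SESSION_ROWS = [
    ("User 1", "Session 1", 1),
    ("User 1", "Session 2", 0),
    ("User 2", "Session 2", 1),
]


def label_users(rows):
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "create table session_labels "
        "(user_id text, session_id text, has_not_purchased integer)"
    )
    conn.executemany("insert into session_labels values (?, ?, ?)", rows)
    # max() over 0/1 behaves like "any": a user is 1 if any session is 1.
    # Warehouses with a boolean type (DuckDB, for example) also offer bool_or.
    result = conn.execute(
        """
        select user_id, max(has_not_purchased) as has_not_purchased
        from session_labels
        group by user_id
        order by user_id
        """
    ).fetchall()
    conn.close()
    return result


if __name__ == "__main__":
    print(label_users(SESSION_ROWS))
```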

Implementing data labeling with dbt

Now that we've established our data labeling strategy—both in terms of granularity (session vs. user level) and target variable type—it's time to transform this theoretical framework into practical, executable code. The challenge lies in extracting meaningful signals from Fullstory's rich but complex data structure, where valuable user interaction data is nested within event_properties and source_properties JSON objects.

Rather than wrestling with complex, monolithic queries, dbt enables a modular approach to transforming Fullstory's behavioral data. By breaking down the transformation process into discrete, manageable steps, we can handle everything from event unnesting to session labeling with clarity and precision. This modular approach is particularly valuable when working with Fullstory's rich event data, allowing us to process behavioral signals systematically.

Think of working with dbt as constructing a data pipeline from reusable components called “models.” A dbt model is a SQL or Python script that transforms raw data into a new, usable dataset within your data warehouse, typically by defining a SELECT statement that results in a table or view. You can define one model to handle the initial event processing, another to manage session aggregation, and additional models to create the specific labels we defined earlier, whether at the session or user level. Because each component lives in its own file, your queries are easier to maintain: you can version-control each model and test it on its own, which strengthens data quality assurances.

By combining dbt's transformation capabilities with Fullstory's rich behavioral data, you can confidently build robust data pipelines that turn complex user interactions into structured, labeled datasets ready for machine learning and analytics applications.

Setting up the dbt environment

To begin, you will set up a dbt project locally to demonstrate how to label behavioral data. This process will include setting up a new dbt project, adding a dependency on the dbt_fullstory package, and configuring variables to define your labeling criteria. See Quickstart for dbt Core for a dbt overview if needed.

First, we initialize a new project directory and a Python virtual environment. We use uv for package management, but you can use any tool to set up your virtual environment.

Next, we initialize a new dbt project inside the fsdbt directory. dbt init prompts you for a project name and a database. For this example, name the project fs_label and select DuckDB as the database.

dbt init will create a dbt_project.yml file to define the project. We can also use a profiles.yml file to define database connections. Let’s create the profiles.yml file to configure the local DuckDB database.

Inside the profiles.yml file, add the following configuration. This configuration tells dbt to create a DuckDB database named fs_events.db in the current directory when it runs.

Setting up seed data for running the models locally

To start working with data in your example project, we’ll set up a “seed.” dbt seeds are small, static, version-controlled CSV files in your dbt project that the dbt seed command loads into your data warehouse as tables.

We will create a seeds directory in your project and add a CSV file containing your raw Fullstory event data. We’ve created a small sample of events for this tutorial, which you can download here: fs_events. Alternatively, you can query your Fullstory Anywhere: Warehouse events table, sample just a few sessions, and export the results to a CSV file.
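If you build your own seed, sample whole sessions rather than individual events. That way, each session's event sequence stays intact for the labeling models. The sketch below makes two assumptions. First, you have already pulled the event rows into Python as dictionaries (the warehouse query itself is not shown). Second, a full_session_id column identifies sessions.

```python
import csv
import io
import random


def sample_sessions_to_csv(rows, n_sessions, out, seed=0):
    """Write every event from n randomly chosen sessions to `out` as CSV.

    rows: list of dicts, one per event, all with the same keys. The
    full_session_id key is an assumption about how sessions are identified.
    """
    session_ids = sorted({r["full_session_id"] for r in rows})
    rng = random.Random(seed)  # fixed seed gives a reproducible sample
    chosen = set(rng.sample(session_ids, min(n_sessions, len(session_ids))))
    writer = csv.DictWriter(out, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    # Keep all events of the chosen sessions, never a partial session.
    writer.writerows(r for r in rows if r["full_session_id"] in chosen)
    return chosen


if __name__ == "__main__":
    events = [
        {"full_session_id": f"s{i % 4}", "event_id": f"e{i}", "event_type": "click"}
        for i in range(12)
    ]
    buf = io.StringIO()
    print(sorted(sample_sessions_to_csv(events, 2, buf)))
    print(buf.getvalue())
```

To write the seed file itself, pass a file opened with `open("seeds/fs_events.csv", "w", newline="")` instead of the in-memory buffer.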

Copy your dummy Fullstory event data into a CSV file named fs_events.csv and place it in the seeds directory. Then, create a seeds.yml file in the same directory to define the data types for each column in your seed data.

Now, run dbt seed to load this data into your DuckDB database.

This command will create an fs_events.db file in your dbt project root, which contains the fs_events table created from the CSV file you loaded earlier.

You can now use the DuckDB CLI to verify that the table has been created successfully:

At this point, your dbt directory should have the following structure:

Adding dependencies and variables

Before you can start transforming your data, you'll need to add a dependency on the dbt_fullstory package, which provides pre-built models and additional functionality for working with Fullstory data. The open-source package is available at https://github.com/fullstorydev/dbt_fullstory.

Create a dependencies.yml file in your dbt project root (fsdbt/fs_label) and add the package dependency:

Add the following lines to your dependencies.yml:

Run dbt deps to download and install the package.

Next, you’ll need to configure some dbt variables in order for your model to know the location of the events table and how to parse the events. In dbt, variables allow you to define parameters that can be used across multiple models, making your code more flexible and reusable. Add these lines under the vars section in your dbt_project.yml file:

To run this tutorial with DuckDB locally, you also need to make a few changes to files in the installed dbt_fullstory package: one of its macros and its dbt_project.yml.

Note: these edits are only for running this tutorial on DuckDB. If you run this project against your own data warehouse, revert them.

On lines 14 and 16 in ./dbt_packages/dbt_fullstory/macros/json_value.sql make the below changes

On line 32 of ./dbt_packages/dbt_fullstory/dbt_project.yml make the below change

At this point, we can apply a model to transform our data. Use the dbt run command to invoke a model. Even though we haven’t configured any models yet, we inherited models from the dbt_fullstory dependency that was installed in a previous step. We can run the below command to ensure we have set up the tables, seed data, and the dependency correctly.

The command should execute without errors, and now you can inspect your tables with DuckDB CLI.

Creating a model for data labeling using modular tables

Now we get to the fun part! You are ready to create the SQL models that will perform the data transformation and labeling. dbt's modular approach allows you to break down complex queries into smaller, more manageable steps. You will create three intermediate models that build on each other, with the final model creating the labeled dataset. We have broken down the whole process into five steps:

1. Intermediate model for valid or qualifying sessions

By convention, an intermediate model is a dbt model that transforms raw data but isn't meant for final, end-user consumption. It serves as a building block in a multi-step transformation pipeline, helping to break down complex queries into smaller, more manageable parts.

Create a new directory and a new SQL file:

cd models && mkdir intermediate && cd "$_" && touch int_valid_sessions.sql

The int_valid_sessions model identifies and isolates "valid" or "qualifying" sessions in your raw Fullstory event data. In this example, a valid session is one that contains at least one click on an element with the text "Home".

Add the following SQL to int_valid_sessions.sql:

Explanation:

materialized = "ephemeral": This configuration tells dbt not to build the model as a table or view in your database. Instead, dbt inlines the model's SQL as a common table expression (CTE) in each downstream model that references it. See materializations for more information.

row_number over(partition by full_session_id…: This SQL code numbers the events within each session. row_number() assigns a sequential number (1, 2, 3, etc.), partition by full_session_id restarts the numbering for each session, order by event_time asc orders events from earliest to latest within each session, and as session_start_asc names the resulting column. The earliest event in each session therefore gets session_start_asc = 1.

{{ ref("dbt_fullstory", "events" ) }}: This dbt function references the events model from the dbt_fullstory package, which has already performed some initial transformations on your raw data.

{{ var("query_conf")["valid_session"]["query"] | indent }}: This pulls the valid_session query from the variables in your dbt_project.yml, so you can change the filtering criteria in one central place. We will configure this variable later in the post.
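The following is only a sketch of the same idea. It runs against an in-memory SQLite table through Python's sqlite3 module. The sketch assumes flattened columns (full_session_id, event_time, event_type, target_text). It also shows one plausible use of session_start_asc, which is to keep each qualifying session's first event as its start time. It is not the model's actual SQL.

```python
import sqlite3

# Stand-in for the flattened events model. Column names are assumptions;
# target_text represents a value extracted from event_properties.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    create table events (
        full_session_id text, event_time text, event_type text, target_text text
    );
    insert into events values
        ('s1', '2024-01-01 10:00:00', 'navigate', null),
        ('s1', '2024-01-01 10:00:05', 'click', 'Home'),
        ('s2', '2024-01-01 11:00:00', 'navigate', null),
        ('s2', '2024-01-01 11:00:03', 'click', 'Pricing');
    """
)

VALID_SESSIONS_SQL = """
with numbered as (
    select
        *,
        -- 1 for the earliest event in each session, 2 for the next, ...
        row_number() over (
            partition by full_session_id
            order by event_time asc
        ) as session_start_asc
    from events
),
qualifying as (
    -- the valid-session criterion: at least one click on "Home"
    select distinct full_session_id
    from events
    where event_type = 'click' and target_text = 'Home'
)
select n.full_session_id, n.event_time as session_start
from numbered as n
join qualifying as q on q.full_session_id = n.full_session_id
where n.session_start_asc = 1
order by n.full_session_id
"""

if __name__ == "__main__":
    print(conn.execute(VALID_SESSIONS_SQL).fetchall())
```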

2. Intermediate model for session labels

The second model we will create will flag sessions that contain the target "label event" (in this case, the purchase “Order Completed” event), which you'll use to create your has_not_purchased label.

Create a new SQL file in the models/intermediate directory:

touch int_session_labels.sql

Add the following SQL to int_session_labels.sql:

This model is nearly identical to the previous one, but it filters with the label_event variable instead. Later in the post, we will configure the valid_session and label_event variables in dbt_project.yml.
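This sketch shows the label-event side on a similar hypothetical SQLite table. The event_name column and the "custom" event_type value are assumptions. Grouping by session means that a session with two orders still produces a single row, so the later join does not duplicate sessions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    create table events (
        full_session_id text, event_time text, event_type text, event_name text
    );
    insert into events values
        ('s1', '2024-01-01 10:02:00', 'custom', 'Order Completed'),
        ('s1', '2024-01-01 10:05:00', 'custom', 'Order Completed'),
        ('s2', '2024-01-01 11:01:00', 'custom', 'Newsletter Signup'),
        ('s3', '2024-01-01 12:00:00', 'click', null);
    """
)

# Same query shape as the valid-sessions model; only the predicate differs.
# The predicate is trusted configuration, not user input.
LABEL_EVENT_PREDICATE = "event_type = 'custom' and event_name = 'Order Completed'"

SESSION_LABELS_SQL = f"""
select full_session_id, min(event_time) as first_label_time
from events
where {LABEL_EVENT_PREDICATE}
group by full_session_id
order by full_session_id
"""

if __name__ == "__main__":
    print(conn.execute(SESSION_LABELS_SQL).fetchall())
```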

3. Combined intermediate table for the has_not_purchased label

Now we need a model that joins the two previous intermediate models to create the final has_not_purchased label for each session. Remember, int_valid_sessions.sql defines the qualifying sessions and int_session_labels.sql defines the sessions that contain the target event. Joining them keeps only valid sessions and flags whether each one also contains the target event.

Create a new SQL file in the models/intermediate directory:

touch int_labels.sql

Add the following SQL to int_labels.sql:

This model joins the int_valid_sessions and int_session_labels tables to determine if a valid session also contained a purchase event. The has_not_purchased field will be true if a purchase event was found (o.full_session_id is not null) and false otherwise.
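Here is a sketch of that join on hypothetical SQLite tables that stand in for the two intermediate models. The left join keeps every valid session. Session s9 has a purchase but is not a valid session, so it drops out.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- outputs of the two previous steps (illustrative rows)
    create table valid_sessions (full_session_id text, session_start text);
    insert into valid_sessions values
        ('s1', '2024-01-01 10:00:00'),
        ('s2', '2024-01-01 11:00:00');
    create table session_labels (full_session_id text, first_label_time text);
    insert into session_labels values
        ('s1', '2024-01-01 10:02:00'),
        ('s9', '2024-01-01 13:00:00');  -- has the label event but is not valid
    """
)

LABELS_SQL = """
select
    v.full_session_id,
    v.session_start,
    -- 1 when the valid session also has a label event, else 0
    o.full_session_id is not null as has_not_purchased
from valid_sessions as v
left join session_labels as o
    on o.full_session_id = v.full_session_id
order by v.full_session_id
"""

if __name__ == "__main__":
    print(conn.execute(LABELS_SQL).fetchall())
```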

4. Define `valid_session` and `label_event` variables

In the previous steps, we templatized the int_valid_sessions and int_session_labels models with var("query_conf")["valid_session"] and var("query_conf")["label_event"], so that we can pass the valid-session and label criteria in from configuration. Now we need to define these variables in the dbt_project.yml file.

In our case, we define valid sessions as those that have at least one click on the ‘Home’ text (see valid_session below). Our label criterion, as mentioned previously, is the custom event “Order Completed” (see label_event below). We can also restrict sessions to a specific time range with the valid_timerange variable, which nests a start and an end value. You’ll see both referenced in the int_valid_sessions and int_session_labels models as var("query_conf")["valid_timerange"]["start"] and var("query_conf")["valid_timerange"]["end"].

In the end, the vars in your dbt_project.yml file should include the following (you can edit the values if you haven’t used our seed data).
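The Python dict below shows how these nested keys are consumed. It mirrors the query_conf structure that the models read, with hypothetical predicate strings and dates, and str.format stands in for dbt's Jinja rendering. Treating end as exclusive is an assumption, so check how the models compare event_time against it.

```python
import sqlite3

# Hypothetical values laid out with the nested keys the models read:
# var("query_conf")["valid_session"]["query"], ["label_event"]["query"],
# and ["valid_timerange"]["start"] / ["end"]. Column names are assumptions.
QUERY_CONF = {
    "valid_session": {"query": "event_type = 'click' and target_text = 'Home'"},
    "label_event": {
        "query": "event_type = 'custom' and event_name = 'Order Completed'"
    },
    "valid_timerange": {"start": "2024-01-01", "end": "2024-02-01"},
}

# str.format stands in for dbt's Jinja rendering. The values come from
# trusted project configuration, never from end-user input.
TEMPLATE = """
select distinct full_session_id
from events
where event_time >= '{start}' and event_time < '{end}'
  and ({predicate})
order by full_session_id
"""


def render(conf, criterion):
    window = conf["valid_timerange"]
    return TEMPLATE.format(
        start=window["start"], end=window["end"], predicate=conf[criterion]["query"]
    )


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    create table events (full_session_id text, event_time text,
                         event_type text, target_text text, event_name text);
    insert into events values
        ('s1', '2024-01-15 09:00:00', 'click', 'Home', null),
        ('s1', '2024-01-15 09:03:00', 'custom', null, 'Order Completed'),
        ('s2', '2024-03-02 10:00:00', 'click', 'Home', null);  -- outside window
    """
)

if __name__ == "__main__":
    for criterion in ("valid_session", "label_event"):
        print(criterion, conn.execute(render(QUERY_CONF, criterion)).fetchall())
```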

5. Setting up the final labels table

Now, we can create the final table that contains the labeled sessions. This table will be a permanent table in your database, which you can use for machine learning or other analytics.

Create a new file session_labels.sql in the main models directory:

touch session_labels.sql

Add the following SQL to session_labels.sql:

Explanation:

materialized = "incremental": This dbt configuration tells dbt to build this table incrementally. On later runs, dbt merges new rows into the existing table instead of rebuilding it from scratch. When the model also filters with is_incremental(), only data that arrived since the last run is processed, which is useful for large datasets. See materializations for more information.

incremental_strategy = 'delete+insert': This strategy specifies how dbt merges new data. It first deletes existing rows whose unique key matches the incoming rows and then inserts the new rows, so reprocessed sessions are replaced rather than duplicated (you can reference the dbt documentation on incremental strategies or the DuckDB adapter configuration for more information).
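Here is a small SQLite simulation, run through Python's sqlite3 module, of what delete+insert does on each run. In dbt, the strategy keys on the model's unique_key config. The post does not show that setting for session_labels, so using full_session_id as the key here is an assumption.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "create table session_labels (full_session_id text, has_not_purchased integer)"
)
# State after a previous run (illustrative rows).
conn.executemany("insert into session_labels values (?, ?)", [("s1", 0), ("s2", 1)])


def apply_delete_insert(conn, batch):
    """Merge one incremental batch using delete+insert semantics.

    Existing rows whose key (full_session_id, standing in for the model's
    unique_key) appears in the batch are deleted, then the whole batch is
    inserted, so reprocessed sessions are replaced instead of duplicated.
    """
    with conn:  # one transaction: both steps commit, or neither does
        conn.executemany(
            "delete from session_labels where full_session_id = ?",
            [(row[0],) for row in batch],
        )
        conn.executemany("insert into session_labels values (?, ?)", batch)


# New batch: s1 was reprocessed (its label changed) and s3 is new.
apply_delete_insert(conn, [("s1", 1), ("s3", 0)])

if __name__ == "__main__":
    print(conn.execute("select * from session_labels order by 1").fetchall())
```

Re-applying the same batch leaves the table unchanged, which is what makes reruns safe.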

Running the dbt project

With all the models and configurations in place, you are ready to run the fs_label dbt project. At this point, your directory structure should look like this:

The dbt compile command renders your models into plain SQL without executing them and writes the results to the fs_label/target/compiled directory, where you can inspect them for correctness. For simplicity, we will only use dbt run, which compiles the models before executing them.

This command will execute all your models in the correct order, creating the session_labels table in your DuckDB database:

After the run is complete, you can inspect the final table using the DuckDB CLI:

The output shows that the session_labels table has been successfully created with the has_not_purchased label applied to each session. It is ready for your analytical or machine learning applications!

Unlock the full potential of your data with Fullstory

By following the approach outlined above, organizations can confidently transform Fullstory's behavioral signals into structured, labeled datasets that power machine learning models and analytics initiatives. Whether you're predicting user behavior, optimizing experiences, or detecting patterns, the foundation lies in properly structured and labeled data.

Remember, the key to success lies not just in capturing rich behavioral data, but in transforming it thoughtfully and systematically into formats that serve your analytical needs. With Fullstory's comprehensive data capture and dbt's transformation capabilities, you have the tools needed to unlock the full potential of your digital experience data.